Liquid AI has just launched a series of language models called Liquid Foundation Models (LFMs).

These new LFMs deliver impressive performance. According to Liquid AI's benchmarks, they outperform some of the best language models of comparable size available today.

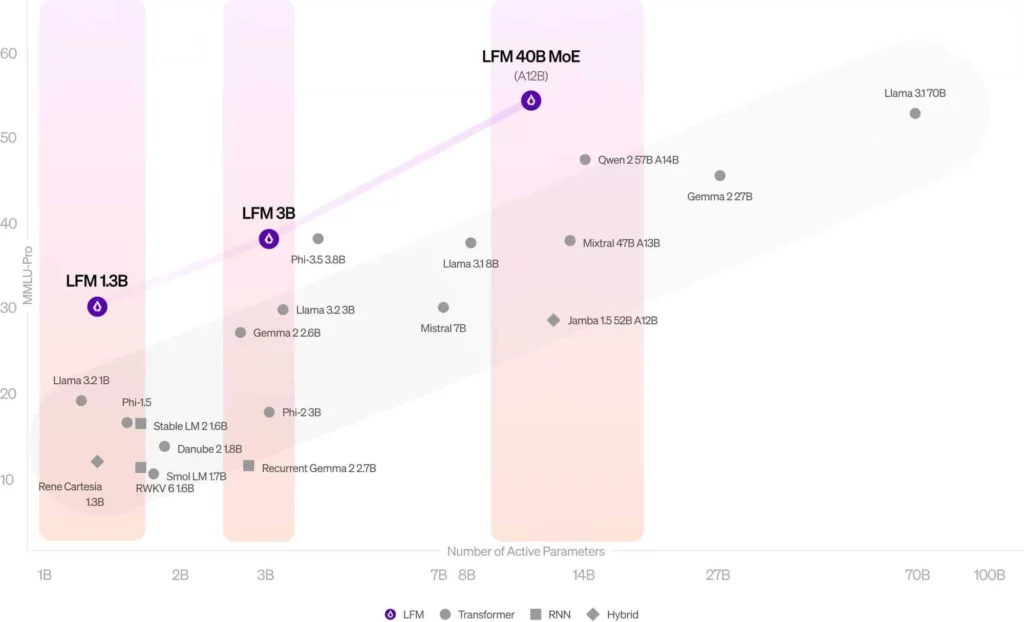

The models range from the compact LFM-1B to the powerful LFM-40B. They are designed to be both smaller and more efficient than comparable models.

- The smallest, LFM-1B, has 1.3 billion parameters. It sets a new standard in its category by outperforming other models of similar size.

- Meanwhile, LFM-3B is tailored for mobile and other edge devices, offering high performance in a smaller package.

- The largest, LFM-40B, uses a Mixture of Experts (MoE) architecture that activates only a subset of its parameters for each input. This lets it rival models with many more parameters at a lower compute cost.
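To make the "activate only part of the model" idea concrete, here is a minimal sketch of generic top-k Mixture of Experts routing. This is a textbook illustration, not Liquid AI's implementation.

Everything here is hypothetical and chosen only to show the mechanism:

- the tiny dimensions, the 8 experts, and `top_k=2`;
- the random weights;
- the helper names (`moe_forward`, `random_matrix`).

A router scores every expert for the current input. Only the highest-scoring experts are evaluated, and their outputs are blended.

```python
import math
import random


def softmax(xs):
    # Numerically stable softmax over a list of floats
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(w, x):
    # Multiply a matrix (list of rows) by a vector
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def random_matrix(rows, cols, seed):
    # Hypothetical random weights; a real model would use trained weights
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


def moe_forward(x, router, experts, top_k=2):
    # The router produces one score (logit) per expert for this input
    logits = matvec(router, x)
    # Keep only the top_k highest-scoring experts; the others are never evaluated
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    gates = softmax([logits[i] for i in chosen])
    # Output is the gate-weighted sum of the chosen experts' outputs
    out = [0.0] * len(x)
    for gate, i in zip(gates, chosen):
        y = matvec(experts[i], x)
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, chosen


# Hypothetical toy configuration
DIM, NUM_EXPERTS, TOP_K = 4, 8, 2
experts = [random_matrix(DIM, DIM, seed=i) for i in range(NUM_EXPERTS)]
router = random_matrix(NUM_EXPERTS, DIM, seed=100)

x = [0.5, -1.0, 0.25, 2.0]
out, chosen = moe_forward(x, router, experts, top_k=TOP_K)

params_per_expert = DIM * DIM
print("experts used:", chosen)
print("active expert params:", TOP_K * params_per_expert, "of", NUM_EXPERTS * params_per_expert)
```

With 8 experts and `top_k=2`, only 32 of the 128 expert weights are used for this input. Scaled up, the same principle lets a large MoE model run with roughly the per-token compute of a much smaller dense model.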

What makes these models special is how they handle long inputs. Their memory footprint stays small as the text grows. Transformer-based models, by contrast, keep a key-value cache that grows with every token of context. This means LFMs can process and generate longer documents or conversations more efficiently.
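Why long inputs are expensive for transformers comes down to simple arithmetic. The back-of-the-envelope sketch below estimates the size of a transformer's key-value (KV) cache.

All figures are hypothetical and purely illustrative:

- the layer count, KV-head count, head size, and fp16 storage;
- the fixed-size-state model used for contrast.

The fixed-state model is not a description of how LFMs work internally.

```python
GIB = 1024 ** 3


def kv_cache_bytes(seq_len, n_layers=32, n_kv_heads=8, head_dim=128, bytes_per_value=2):
    # Keys and values (hence the factor 2) are cached for every layer,
    # every KV head, and every token seen so far; fp16 uses 2 bytes per value.
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_value * seq_len


# Hypothetical model whose working state has a fixed size, independent of input length
FIXED_STATE_BYTES = 128 * 1024 ** 2

for n_tokens in (1_024, 8_192, 32_768):
    print(f"{n_tokens:>6} tokens: KV cache {kv_cache_bytes(n_tokens) / GIB:.3f} GiB, "
          f"fixed state {FIXED_STATE_BYTES / GIB:.3f} GiB")
```

With these settings the cache costs 128 KiB per token. It reaches 1 GiB at 8,192 tokens and 4 GiB at 32,768 tokens, while the fixed-size state stays at 0.125 GiB no matter how long the input is.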

Liquid AI is making these models accessible through various platforms like Liquid Playground and Perplexity Labs.

While these models show great promise with their innovative design and impressive benchmark results, they’re still new to the scene.

They also have limitations. For example, they struggle with coding tasks and with real-time, time-sensitive information.

However, as LFMs are adopted in real-world applications, they could shape how people interact with AI by offering smarter and more efficient tools.

Keep an eye on this space; the impact of LFMs could be significant as they evolve.